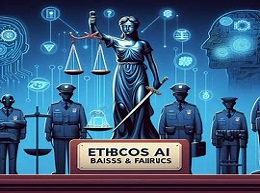

The Ethics of AI in Criminal Justice: Bias and Fairness

Navigating the Intersection of AI and Justice

As artificial intelligence (AI) increasingly permeates the criminal justice system, questions of ethics and fairness come to the forefront. While AI promises efficiency and accuracy, it also brings the risk of perpetuating biases and inequities. In this article, we delve into the ethical considerations surrounding AI in criminal justice, focusing on bias mitigation and ensuring fairness in decision-making processes.

Understanding AI in Criminal Justice

Role of AI in Decision Making

AI systems are employed in various facets of the criminal justice process, including risk assessment, sentencing recommendations, and parole determinations. These systems analyze large datasets to predict outcomes and inform decisions made by judges, prosecutors, and parole boards.

Example: COMPAS Risk Assessment Tool

The COMPAS (Correctional Offender Management Profiling for Alternative Sanctions) risk assessment tool is widely used in the U.S. criminal justice system to predict a defendant's likelihood of reoffending. However, concerns have been raised about its fairness and accuracy, as studies have shown disparities in risk scores across racial and socioeconomic groups.

Identifying Bias in AI Algorithms

Types of Bias

AI algorithms can exhibit various forms of bias, including racial bias, gender bias, and socioeconomic bias. These biases may stem from historical data, algorithmic design choices, or systemic inequalities within the criminal justice system.

Example: Racial Bias in Predictive Policing

Predictive policing algorithms have been criticized for disproportionately targeting minority communities. They rely on historical crime data that reflect biases in past policing practices, so areas that were heavily policed generate more recorded crime, which in turn directs more patrols to the same areas. The result can be over-policing and unjust treatment of marginalized groups.

Mitigating Bias in AI Systems

Data Collection and Preprocessing

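Diverse and representative datasets are essential for mitigating bias in AI systems. Data collection methods should be transparent and documented. Preprocessing techniques such as feature selection and reweighting of under-represented groups can reduce biases inherent in the data. Anonymizing records or dropping a protected attribute such as race is not sufficient on its own, because correlated features (for example, zip code) can act as proxies for it.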
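One concrete preprocessing approach is reweighing, used here as an illustrative choice rather than a method this article prescribes. Each record gets a weight so that, in the weighted data, group membership and the outcome label look statistically independent. The sketch below is minimal and uses a hypothetical toy dataset; the names `groups`, `labels`, and `reweighing_weights` are assumptions made for illustration.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Return one weight per record: P(group) * P(label) / P(group, label).

    Probabilities are estimated from simple counts over the input lists.
    """
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(
        w for g, y, w in zip(groups, labels, weights) if g == group and y == 1
    )
    return positive / total


# Hypothetical toy data: group "a" has a higher recorded positive rate than "b".
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "a")
rate_b = weighted_positive_rate(groups, labels, weights, "b")
print(rate_a, rate_b)  # the weighted rates match across the two groups
```

Reweighing only changes how much each record counts during training. It does not correct labels that are themselves biased, such as arrests that reflect uneven enforcement.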

Algorithmic Fairness Measures

Developing fairness-aware algorithms that explicitly account for fairness constraints and minimize disparate impacts on protected groups is crucial. Techniques such as fairness-aware learning and adversarial debiasing aim to promote fairness while maintaining model performance.
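Before a fairness constraint can be enforced, disparate impact has to be measured. The sketch below computes two common summary measures, the difference and the ratio of "flagged high risk" rates between groups. The data and the names `preds_by_group` and `parity_metrics` are hypothetical, and this is one possible measure among several, not a definitive test of fairness.

```python
def selection_rate(predictions):
    """Share of individuals flagged (prediction == 1)."""
    return sum(predictions) / len(predictions)


def parity_metrics(preds_by_group):
    """Compare flag rates across groups.

    Returns the per-group rates, the gap between the highest and lowest
    rate, and the ratio of the lowest rate to the highest rate.
    """
    rates = {g: selection_rate(p) for g, p in preds_by_group.items()}
    lowest, highest = min(rates.values()), max(rates.values())
    return {
        "rates": rates,
        "parity_difference": highest - lowest,
        "impact_ratio": lowest / highest if highest > 0 else 1.0,
    }


# Hypothetical predictions: 1 = flagged as high risk, 0 = not flagged.
preds_by_group = {
    "a": [1, 1, 0, 0, 1],
    "b": [1, 0, 0, 0, 0],
}

metrics = parity_metrics(preds_by_group)
print(metrics)
```

A parity difference near 0 and an impact ratio near 1 indicate similar flag rates. Equal flag rates do not by themselves show that the errors are distributed equally, so these numbers are usually read alongside per-group error rates.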

Example: ProPublica's Analysis of COMPAS

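In 2016, ProPublica investigated the fairness of the COMPAS risk assessment tool by comparing its predictions with actual outcomes across racial groups. The analysis reported that Black defendants who did not go on to reoffend were almost twice as likely as white defendants to have been labeled higher risk. The tool's developer disputed the conclusion, arguing that the scores were equally predictive for both groups. The debate prompted calls for transparency and accountability in algorithmic decision-making.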
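An audit of this kind compares error rates between groups rather than overall accuracy. The following sketch shows the general idea on synthetic records; it does not use ProPublica's data or reproduce its method, and the record layout `(group, reoffended, flagged)` is an assumption made for illustration.

```python
def error_rates(y_true, y_pred):
    """False positive and false negative rates for binary labels."""
    false_pos = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    false_neg = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    negatives = sum(1 for t in y_true if t == 0)
    positives = sum(1 for t in y_true if t == 1)
    return {
        "false_positive_rate": false_pos / negatives if negatives else float("nan"),
        "false_negative_rate": false_neg / positives if positives else float("nan"),
    }


def audit_by_group(records):
    """Group (group, reoffended, flagged) records and compute error rates."""
    by_group = {}
    for group, reoffended, flagged in records:
        truths, preds = by_group.setdefault(group, ([], []))
        truths.append(reoffended)
        preds.append(flagged)
    return {g: error_rates(t, p) for g, (t, p) in by_group.items()}


# Synthetic records: (group, reoffended, flagged as high risk).
records = [
    ("a", 0, 1), ("a", 0, 1), ("a", 0, 0), ("a", 0, 0), ("a", 1, 1), ("a", 1, 0),
    ("b", 0, 1), ("b", 0, 0), ("b", 0, 0), ("b", 0, 0), ("b", 1, 1), ("b", 1, 1),
]

audit = audit_by_group(records)
for group, rates in audit.items():
    print(group, rates)
```

In this toy data, group "a" has a higher false positive rate than group "b", meaning more people who did not reoffend were flagged. That is the kind of gap such an audit is designed to surface.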

Ensuring Transparency and Accountability

Algorithm Transparency

Promoting transparency in AI systems allows stakeholders to understand how decisions are made and identify potential biases or errors. Providing explanations for algorithmic decisions and disclosing model performance metrics enhances accountability and trust in AI systems.

Example: Right to Explanation

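The European Union's General Data Protection Regulation (GDPR) is often described as creating a "right to explanation." The phrase does not appear in the regulation's binding articles. Article 22 restricts decisions based solely on automated processing, Articles 13 to 15 require organizations to provide "meaningful information about the logic involved," and Recital 71 refers to obtaining an explanation of such a decision. How far these provisions amount to an enforceable right to an explanation of individual decisions, including those made by AI systems, remains debated.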

Ethical Considerations and Challenges

Balancing Objectivity and Fairness

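Striking a balance between predictive accuracy and fairness is challenging. A model optimized for accuracy alone can produce unequal error rates across groups, while enforcing a fairness constraint can reduce accuracy, and different definitions of fairness can conflict with one another. Ethical considerations require careful deliberation to ensure that AI systems uphold principles of justice and equity.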
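One reason the balance is hard is arithmetic. From the definitions of the confusion-matrix rates, the false positive rate satisfies FPR = p / (1 - p) × (1 - PPV) / PPV × (1 - FNR), where p is the share of a group that actually reoffends, PPV is the share of flagged individuals who reoffend, and FNR is the false negative rate. The sketch below plugs in hypothetical numbers to show that two groups with identical PPV and FNR but different p end up with different false positive rates.

```python
def false_positive_rate(prevalence, ppv, fnr):
    """FPR implied by prevalence, positive predictive value, and FNR.

    Derived from the definitions: TP = (1 - FNR) * p, FP = TP * (1 - PPV) / PPV,
    and FPR = FP / (1 - p), with all quantities expressed as population shares.
    """
    return prevalence / (1 - prevalence) * (1 - ppv) / ppv * (1 - fnr)


# Hypothetical values: the tool is equally "predictive" for both groups
# (same PPV, same FNR), but the recorded reoffense rates differ.
ppv, fnr = 0.6, 0.3
fpr_group_a = false_positive_rate(prevalence=0.5, ppv=ppv, fnr=fnr)
fpr_group_b = false_positive_rate(prevalence=0.3, ppv=ppv, fnr=fnr)
print(round(fpr_group_a, 3), round(fpr_group_b, 3))
```

Under these assumed numbers, the group with the higher recorded reoffense rate has a much higher false positive rate even though the tool is equally predictive for both groups. Equalizing the false positive rates would require giving up equality on one of the other two measures.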

Example: Pretrial Risk Assessment

Pretrial risk assessment tools aim to predict a defendant's risk of flight or reoffending, but concerns have been raised about their impact on bail decisions and the potential for exacerbating disparities in pretrial detention rates.

Future Directions and Opportunities

Ethical AI Design Principles

Developing ethical AI design principles that prioritize fairness, transparency, and accountability can guide the development and deployment of AI systems in criminal justice settings. Collaboration between technologists, policymakers, and legal experts is essential to establish ethical frameworks and guidelines for AI governance.

Example: Fairness by Design

Fairness by design approaches integrate fairness considerations into the entire AI development lifecycle, from data collection and algorithm design to deployment and evaluation. By proactively addressing bias and fairness concerns, organizations can build more equitable AI systems that uphold the principles of justice and fairness.

Striving for Ethical AI in Criminal Justice

As AI continues to play a prominent role in the criminal justice system, addressing bias and fairness concerns is paramount to ensure equitable outcomes for all individuals involved. By adopting transparent, accountable, and fairness-aware AI practices, we can navigate the ethical complexities of AI in criminal justice and work towards a system that upholds the principles of justice, fairness, and equality.